Kamailio Quick Install Guide for v4.4.x – CentOS 7

Are you looking for the CentOS 6 version? It can be found here

This article will provide step-by-step instructions on how to install Kamailio 4.4.x on CentOS 7 using yum packages.

Setup YUM Repository

- Install wget so we can pull down the repo file.

yum install wget

- Download the yum repo file for our CentOS version.

cd /etc/yum.repos.d/

wget http://download.opensuse.org/repositories/home:/kamailio:/v4.4.x-rpms/CentOS_7/home:kamailio:v4.4.x-rpms.repo

- Update the system so yum is aware of the new repository.

yum update

- You can look at the kamailio packages in the YUM repository by typing:

yum search kam
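The repository URL used above names the Kamailio branch twice and the distribution once. As a rough sketch, the helper below rebuilds that URL from its parts. The function name kamailio_repo_url is hypothetical, and only the 4.4.x / CentOS_7 combination comes from this guide; whether other branch or distribution directories exist on the mirror is an assumption you would need to check yourself.

```shell
#!/bin/sh
# Hypothetical helper: rebuild the repo file URL used in this guide.
# $1 = Kamailio branch (e.g. 4.4.x), $2 = distribution directory (e.g. CentOS_7)
kamailio_repo_url() {
    branch="$1"
    distro="$2"
    printf 'http://download.opensuse.org/repositories/home:/kamailio:/v%s-rpms/%s/home:kamailio:v%s-rpms.repo\n' \
        "$branch" "$distro" "$branch"
}

# Print the URL for the combination used in this guide.
kamailio_repo_url 4.4.x CentOS_7
```

From inside /etc/yum.repos.d/ the result could be passed straight to wget, for example wget "$(kamailio_repo_url 4.4.x CentOS_7)".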

Install Kamailio and Required Database Modules

- Install the following packages from the new repo.

yum install -y kamailio kamailio-mysql kamailio-debuginfo kamailio-unixodbc kamailio-utils gdb

- Set kamailio to start at boot.

chkconfig kamailio on

- The Kamailio configuration files will be owned by the root user, rather than the kamailio user created by the Kamailio package. We will need to change the ownership of these files. The last command adds a tmpfiles.d entry so that /run/kamailio is recreated with the correct owner at every boot.

chown -R kamailio:kamailio /var/run/kamailio

chown kamailio:kamailio /etc/default/kamailio

chown -R kamailio:kamailio /etc/kamailio/

echo "d /run/kamailio 0750 kamailio kamailio" > /etc/tmpfiles.d/kamailio.conf
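To confirm that the ownership changes took effect, something like the following could be used. It is only a sketch: check_owner is a hypothetical helper name, and it relies on GNU stat (the -c option), which is what CentOS ships.

```shell
#!/bin/sh
# Hypothetical helper: report whether a path has the expected owner:group.
# Relies on GNU stat (-c), as found on CentOS.
check_owner() {
    path="$1"
    expected="$2"
    actual=$(stat -c '%U:%G' "$path") || return 2
    if [ "$actual" = "$expected" ]; then
        echo "OK   $path ($actual)"
    else
        echo "FAIL $path (is $actual, expected $expected)"
        return 1
    fi
}

# On the Kamailio host you would run, for example:
#   for p in /var/run/kamailio /etc/default/kamailio /etc/kamailio; do
#       check_owner "$p" kamailio:kamailio
#   done
```

The helper only inspects the path it is given; it does not descend into directories, so it will not catch a single root-owned file deep inside /etc/kamailio.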

Install MySQL

- Since we plan on using MySQL, we will need to install the MySQL server as well as the client. On CentOS 7 these are provided by the MariaDB packages.

yum install -y mariadb-server mariadb

- Next we need to start up MySQL:

systemctl start mariadb

- And enable MariaDB at boot.

systemctl enable mariadb

- Now we can set a root password for MySQL:

You can answer yes to all the options. There is no root password yet, so just press Enter at the first prompt. Be sure to use a secure, unique password for your root user.

[root@localhost yum.repos.d]# /usr/bin/mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MySQL

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MySQL to secure it, we'll need the current

password for the root user. If you've just installed MySQL, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MySQL

root user without the proper authorisation.

Set root password? [Y/n] Y

New password: (enter password)

Re-enter new password: (enter password)

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MySQL installation has an anonymous user, allowing anyone

to log into MySQL without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] Y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] Y

... Success!

By default, MySQL comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] Y

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] Y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MySQL

installation should now be secure.

Thanks for using MySQL!

Configure Kamailio to use MySQL

- By default, Kamailio does not use MySQL. To change this we need to edit one of Kamailio’s configuration files.

vi /etc/kamailio/kamctlrc

- Uncomment the DBENGINE parameter by removing the pound symbol and make sure the value equals MYSQL. The parameter should look like this afterwards:

DBENGINE=MYSQL

- To have the kamdbctl command create the MySQL database with the correct permissions, we will want to set the database users and passwords in kamctlrc:

## database read/write user

DBRWUSER="kamailio"

## password for database read/write user

DBRWPW="kamailiorw"

## database read only user

DBROUSER="kamailioro"

## password for database read only user

DBROPW="kamailioro"
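If you would rather script these kamctlrc edits than make them in vi, a minimal sketch is shown below. set_kamctlrc_param is a hypothetical helper, not something shipped with Kamailio, and it assumes GNU sed (for -i). The demonstration works on a scratch file instead of the live /etc/kamailio/kamctlrc, and the password is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: set KEY=VALUE in a kamctlrc-style file.
# A commented-out "# KEY=..." line is uncommented and rewritten;
# if the key is absent, the assignment is appended.
# Caveat: VALUE must not contain "|", "&" or "\", which sed would interpret.
set_kamctlrc_param() {
    file="$1"
    key="$2"
    value="$3"
    if grep -q "^#*[[:space:]]*${key}=" "$file"; then
        sed -i "s|^#*[[:space:]]*${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Demonstration on a scratch file rather than the live configuration.
demo=$(mktemp)
printf '%s\n' '# DBENGINE=MYSQL' '## database read/write user' '# DBRWUSER="kamailio"' > "$demo"

set_kamctlrc_param "$demo" DBENGINE MYSQL
set_kamctlrc_param "$demo" DBRWUSER '"kamailio"'
set_kamctlrc_param "$demo" DBRWPW '"use-a-strong-password"'   # placeholder value

cat "$demo"
```

Descriptive comment lines that start with ## are left alone because they do not contain a KEY= assignment. Back up the real file before pointing the helper at it.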

Create the Kamailio Database Schema

- The following command will create all the users and tables needed by Kamailio. You will be prompted for the MySQL root password that you created in the Install MySQL section of this document. You will also be asked whether you want to install additional tables; answer "y" to all the questions.

/usr/sbin/kamdbctl create

- Below are all the prompts you will be presented with:

MySQL password for root: ''

Install presence related tables? (y/n): y

Install tables for imc cpl siptrace domainpolicy carrierroute userblacklist htable purple uac pipelimit mtree sca mohqueue rtpproxy? (y/n): y

Install tables for uid_auth_db uid_avp_db uid_domain uid_gflags uid_uri_db? (y/n): y

- The following MySQL users and passwords are created (please change these in a production environment).

- kamailio – (with default password ‘kamailiorw’) – user which has full access rights to the ‘kamailio’ database.

- kamailioro – (with default password ‘kamailioro’) – user which has read-only access rights to ‘kamailio’ database.

Enable the mysql and auth modules.

Add the following to the beginning of /etc/kamailio/kamailio.cfg, after the #!KAMAILIO line:

#!define WITH_MYSQL

#!define WITH_AUTH

Update the DBURL line to match the username and password you set in kamctlrc before running kamdbctl.

The line looks like this by default:

#!define DBURL "mysql://kamailio:kamailiorw@localhost/kamailio"

If you changed the username and password, the line would look like this:

#!define DBURL "mysql://new_username:new_password@localhost/kamailio"

The new_username and new_password fields would be replaced with the values you entered in the /etc/kamailio/kamctlrc file.
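Because kamctlrc uses shell variable syntax, the DBURL line can be derived from it instead of being typed by hand. The sketch below does this with a hypothetical helper, dburl_from_kamctlrc. It assumes the database is named kamailio and runs on localhost, as in this guide, and that the credentials contain no characters that need escaping in a URL (such as @, : or /).

```shell
#!/bin/sh
# Hypothetical helper: print a DBURL define built from the read/write
# credentials in a kamctlrc-style file. The file is sourced in a subshell,
# so its variables do not leak into the calling shell.
# Assumes database "kamailio" on localhost, as in this guide.
dburl_from_kamctlrc() {
    (
        . "$1" || exit 1
        printf '#!define DBURL "mysql://%s:%s@localhost/kamailio"\n' "$DBRWUSER" "$DBRWPW"
    )
}

# Demonstration with a scratch file holding the default credentials.
rc=$(mktemp)
printf '%s\n' 'DBENGINE=MYSQL' 'DBRWUSER="kamailio"' 'DBRWPW="kamailiorw"' > "$rc"
dburl_from_kamctlrc "$rc"
rm -f "$rc"
```

Sourcing a file executes it, so only use this on a kamctlrc you trust, and pass an absolute path such as /etc/kamailio/kamctlrc.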

Start the Kamailio Server

service kamailio start

Note that the startup options for Kamailio are located in /etc/default/kamailio.

Test Kamailio

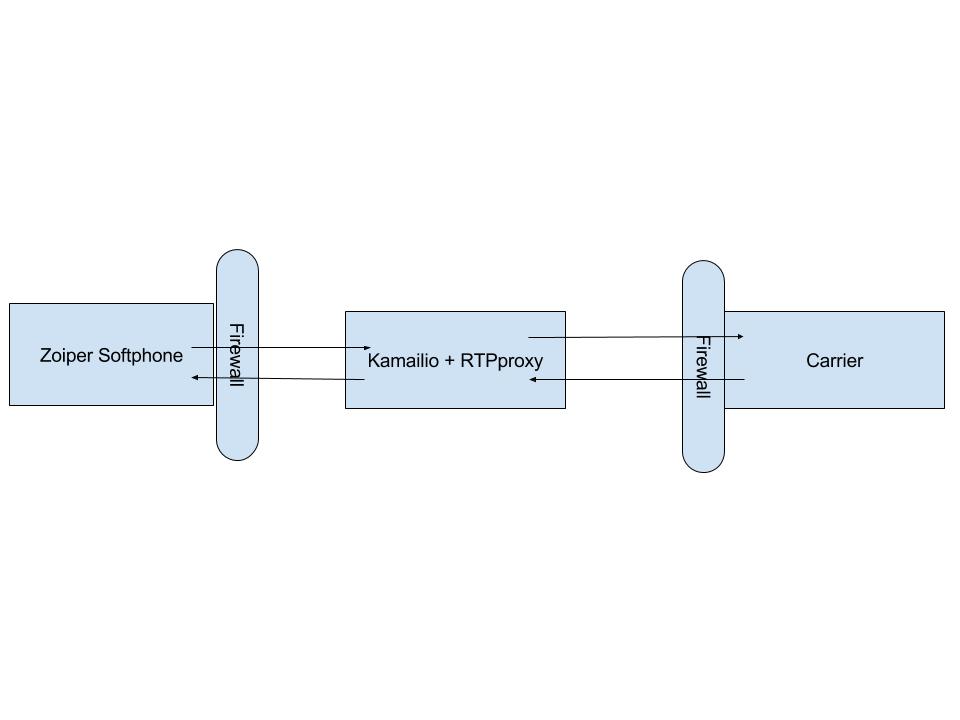

- In order to test that Kamailio is working correctly, I’m going to create a SIP user account and register that account using a softphone such as X-Lite, Linphone, or Zoiper.

Create SIP User Accounts

- The following command will create a new SIP user. Note that the domain portion has to be specified unless you export the SIP_DOMAIN environment variable.

kamctl add <extension@domain> <extension password>

- Here is what I created:

kamctl add 1001@dopensource.com opensourceisneat
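When you need more than one account, the kamctl add commands can be generated rather than typed. The following is a sketch only: print_kamctl_adds is a hypothetical helper, example.com is a placeholder domain, and the commands are printed, not executed, so you can review them first.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) one "kamctl add" command per extension
# in the range $2..$3 for domain $1, each with a random 24-hex-character password.
print_kamctl_adds() {
    domain="$1"
    ext="$2"
    last="$3"
    while [ "$ext" -le "$last" ]; do
        # 12 random bytes rendered as hex
        pw=$(head -c 12 /dev/urandom | od -An -tx1 | tr -d ' \n')
        printf 'kamctl add %s@%s %s\n' "$ext" "$domain" "$pw"
        ext=$((ext + 1))
    done
}

# Print commands for extensions 1001 to 1003 in a placeholder domain.
print_kamctl_adds example.com 1001 1003
```

Save the output somewhere safe before running it, since it is the only place the generated passwords appear.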

Registering a SIP Softphone

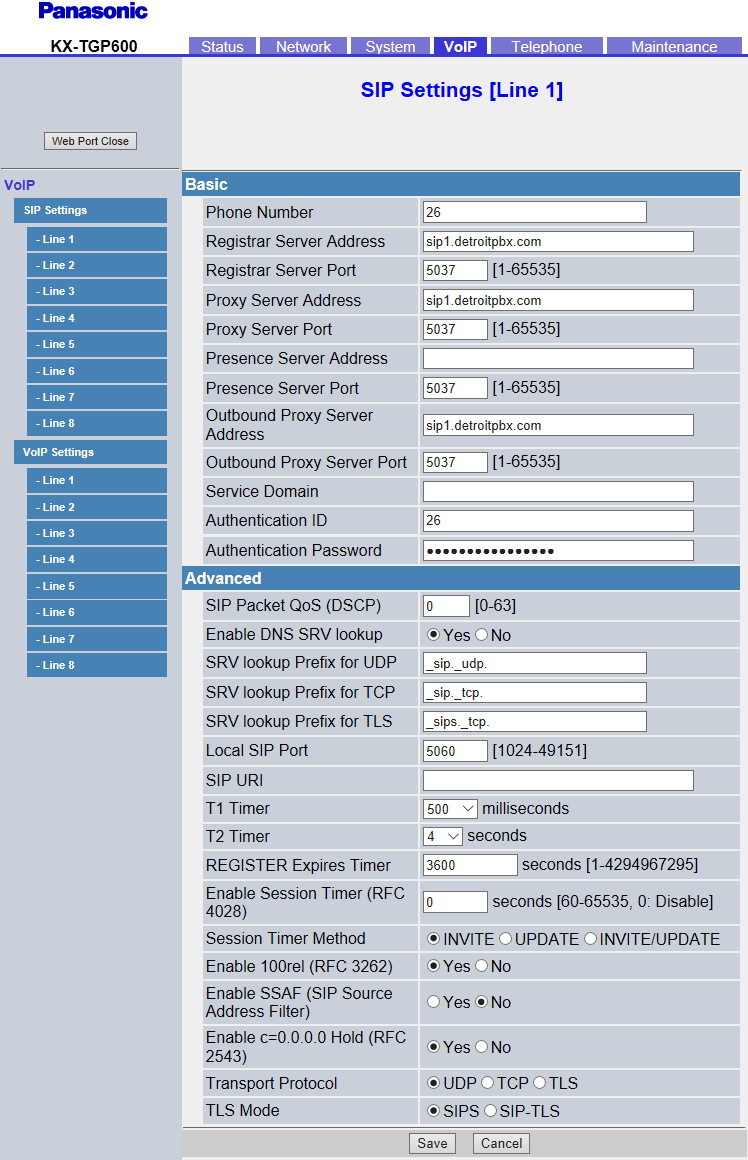

- Configure whichever softphone you choose with the following options:

User ID: 1001

Domain:

Password: opensourceisneat

- Once you are registered, you can view all the registered extensions in Kamailio with the following command:

kamctl ul show

You will get something like this:

Domain:: location table=1024 records=1 max_slot=1

AOR:: 1001

Contact:: sip:1001@192.168.1.140:40587;rinstance=636c6f6dedce9a2b;transport=UDP Q=

Expires:: 3559

Callid:: OWNlYzg2YThmMmI1MGM1YjMyZTk3NjU2ZTdhMWFlN2E.

Cseq:: 2

User-agent:: Z 3.3.21937 r21903

State:: CS_NEW

Flags:: 0

Cflag:: 0

Socket:: udp:104.131.171.248:5060

Methods:: 5087

Ruid:: uloc-5a2f0176-36a3-1

Reg-Id:: 0

Last-Keepalive:: 1513030025

Last-Modified:: 1513030025
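With more than a handful of registrations the full listing gets long. As a sketch, the filter below reduces it to one line per contact. list_registrations is a hypothetical helper, and it assumes kamctl prints one "Key:: value" pair per line.

```shell
#!/bin/sh
# Hypothetical helper: read "kamctl ul show" text on stdin and print
# "<AOR> <contact URI>" for each registered contact.
# Assumes one "Key:: value" pair per line.
list_registrations() {
    awk '
        $1 == "AOR::"     { aor = $2 }
        $1 == "Contact::" { print aor, $2 }
    '
}

# Demonstration with two lines taken from the sample output in this guide.
printf '%s\n' \
    'AOR:: 1001' \
    'Contact:: sip:1001@192.168.1.140:40587;rinstance=636c6f6dedce9a2b;transport=UDP Q=' |
    list_registrations
```

On the server itself the filter would be used as kamctl ul show | list_registrations.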

Make a Test Call

- You can call yourself by entering 1001 into your softphone. If it rings then you have a basic Kamailio server installed and ready to be configured to provide load balancing, failover, accounting, etc. As an exercise, you should create another SIP user account and register that user using another softphone and try calling between the two SIP users.

References

- Install And Maintain Kamailio v4.4.x From GIT – http://www.kamailio.org/wiki/install/4.4.x/git