This post is PART 3 of a series that details how to install Apache Directory Studio and OpenLDAP server and connect the two seamlessly. In the last post we went over adding users, groups and organizations to your OpenLDAP server. We also went over modifying existing users and groups in your LDAP tree and how to query your LDAP database.

We have been using “.ldif” files and a terminal to manage our OpenLDAP tree thus far, but this method (although necessary to start) doesn’t provide a very nice “User Experience” or UX as we call it on the dev side of the house. So let’s change that and put a nice Graphical User Interface (GUI) on top of our OpenLDAP system to manage our LDAP tree entries.

A quick note: there is a ton of information on querying your OpenLDAP tree structure that we have not yet touched on. Learning these methods will be vital to managing your users quickly and efficiently. Just to give you a glimpse forward, there are many advanced methods for searching and filtering LDAP queries such as: specifying the search base, binding to a DN, specifying the scope of the search, wildcard and substring matching, and filtering output fields, to name a few. We will go over ways to effectively get your data from your OpenLDAP server in a later post.

If you haven’t read PART 1 you can find it here: Installing OpenLDAP

If you haven’t read PART 2 you can find it here: Configuring OpenLDAP

We will now walk through the installation of Apache Directory Studio and how to connect it to your existing OpenLDAP server. The examples below assume that the domain is dopensource.com, but you MUST REPLACE this with your own domain. Let’s get started!

Requirements

This guide assumes you have either a Debian-based or a CentOS / Red Hat based Linux distro.

This guide also assumes that you have root access on the server to install the software.

This guide assumes you have followed the steps in PART 1 and PART 2 of the series and have an OpenLDAP server configured with some test users and groups added to your tree.

Installing Apache Directory Studio

Switch to the root user, entering the password when prompted

Either using sudo:

sudo -i

Or if you prefer using su:

su

Install Oracle Java

Prior to installing Apache Directory Studio (Apache DS) we need to install Oracle Java version 8 or higher. The easiest way to do this is through a well-maintained repository / PPA.

Uninstall all old versions of OpenJDK and Oracle JDK:

On a Debian based system the cmd would be:

apt-get -y remove --purge $(dpkg-query -W -f='${Package}\n' | grep -iP '(open(jre|jdk)|^oracle-java|^java([0-9]|-)|^ibm-java)')

On a CentOS based system the cmd would be:

yum -y erase $(rpm -qa --qf '%{NAME}\n' | grep -iP '(open(jre|jdk)|^oracle-java|^java([0-9]|-)|^ibm-java)')
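Because these commands remove every package whose name matches the pattern, it can be worth previewing the matches first. The sketch below is only an illustration: `filter_java_pkgs` is a hypothetical helper, the sample names are made up, and the pattern is a simplified extended-regex variant of the one used above.

```shell
# Hypothetical helper: keep only names that look like Java packages.
filter_java_pkgs() {
    grep -iE '(open(jre|jdk)|^oracle-java|^java([0-9]|-)|^ibm-java)'
}

# Made-up sample names standing in for the real installed-package list.
sample_pkgs='openjdk-8-jre
oracle-java8-installer
java-common
libreoffice-core
bash'

# Print the names that would be selected for removal.
matches=$(printf '%s\n' "$sample_pkgs" | filter_java_pkgs)
printf '%s\n' "$matches"
```

On a real system you would pipe the list of installed package names into the helper instead of the sample list, and review the output before running the removal cmd.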

Remove old Java environment variables

We also need to cleanup any old java environment variables that may cause issues when we install the new version.

Check your environment for any java variables using this cmd:

env | grep "java"

If there are no matches then you can move on to the next section. Otherwise you need to manually remove those variables from your environment.

Check each one of the following files to see if there are any vars we need to remove. If you get a match remove the line that contains that variable entry (most likely an export statement):

cat /etc/environment 2> /dev/null | grep "java"

cat /etc/profile 2> /dev/null | grep "java"

cat ~/.bash_profile 2> /dev/null | grep "java"

cat ~/.bash_login 2> /dev/null | grep "java"

cat ~/.profile 2> /dev/null | grep "java"

cat ~/.bashrc 2> /dev/null | grep "java"
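The six checks above can also be run in one pass. This is a minimal sketch, assuming a POSIX shell and a grep that supports `-H`; `find_java_lines` is a hypothetical helper name, and the search is case-insensitive so that names such as JAVA_HOME are caught as well.

```shell
# Hypothetical helper: list Java-related lines (file name and line number) in the given files.
find_java_lines() {
    for f in "$@"; do
        # Skip files that do not exist or cannot be read.
        [ -r "$f" ] || continue
        # -H prints the file name, -n the line number, -i ignores case.
        grep -Hni 'java' "$f" || true
    done
    return 0
}

find_java_lines /etc/environment /etc/profile \
    ~/.bash_profile ~/.bash_login ~/.profile ~/.bashrc
```

Each line of output has the form `file:line:content`, which tells you exactly where to look in your editor.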

To remove the line just use your favorite text editor and then source that file. For example, if .bashrc was the culprit:

vim ~/.bashrc

... remove stuff ...

source ~/.bashrc
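If you would rather not edit the file by hand, the same cleanup can be scripted. The following is a sketch only: `remove_var_lines` is a hypothetical helper, it deletes every line that assigns the named variable, and it keeps a `.bak` copy of the original file. It is demonstrated on a scratch file rather than a real profile.

```shell
# Hypothetical helper: delete lines that assign the named variable,
# keeping the original as <file>.bak.
remove_var_lines() {
    sed -i.bak "/$1=/d" "$2"
}

# Demonstrate on a scratch file rather than a real profile.
scratch=$(mktemp)
printf '%s\n' 'export JAVA_HOME=/opt/old-jdk' 'alias ll="ls -l"' > "$scratch"
remove_var_lines JAVA_HOME "$scratch"
cat "$scratch"
```

Lines that only reference the variable (for example a PATH entry containing $JAVA_HOME) are left in place, so review the file afterwards before sourcing it.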

Now you are ready to install a recent release of Oracle Java.

Download and install Oracle JDK

For Debian based systems there is a group of developers that packages the latest versions of Oracle Java and provides them as a PPA repository. This is very convenient for updating Java versions. For CentOS based systems you will have to download the .rpm file of the latest release from Oracle’s website and install it manually.

For Debian based systems we will add the PPA, update our packages and install the latest release of Oracle JDK.

cat << EOF > /etc/apt/sources.list.d/oracle-java.list

deb http://ppa.launchpad.net/webupd8team/java/ubuntu trusty main

deb-src http://ppa.launchpad.net/webupd8team/java/ubuntu trusty main

EOF

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys EEA14886

apt-get -y update && apt-get -y install oracle-java8-installer

apt-get install oracle-java8-set-default

For CentOS based systems go to Oracle’s website here, click on accept license agreement, and copy the link of the Linux .rpm file corresponding to your OS architecture. If you don’t know what architecture your machine is, run the following command to find out:

uname -m

Now download and install the rpm using the following cmds:

JAVA_URL='the link that you copied'

JAVA_RPM=${JAVA_URL##*/}

cd /usr/local/

wget -c --no-cookies --no-check-certificate \

--header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" \

"$JAVA_URL"

rpm -ivh "$JAVA_RPM"

rm -f "$JAVA_RPM"

unset JAVA_URL JAVA_RPM
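The `${JAVA_URL##*/}` expansion used above is what turns the download link into a local file name. A small illustration, using a made-up URL (the host and path are assumptions, not a real download link):

```shell
# Made-up URL used only to illustrate the expansion.
EXAMPLE_URL='https://example.com/downloads/jdk-8u131-linux-x64.rpm'

# ${VAR##*/} removes the longest prefix ending in "/", leaving the file name.
EXAMPLE_RPM=${EXAMPLE_URL##*/}
echo "$EXAMPLE_RPM"
```

This is why the later rpm and rm cmds can refer to the downloaded file without you typing its name.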

At this point you should have Oracle Java JDK installed on your machine. Verify with the following cmds:

java -version

javac -version

You should see output similar to the following:

[root@box ~]# java -version

java version "1.8.0_131"

Java(TM) SE Runtime Environment (build 1.8.0_131-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

[root@box ~]# javac -version

javac 1.8.0_131
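If you want to check the version from a script rather than by eye, the quoted version string can be extracted from that output. This is a rough sketch: `parse_java_version` is a hypothetical helper, and it assumes the first line has the `version "..."` form shown above.

```shell
# Hypothetical helper: pull the quoted version string out of `java -version` output.
parse_java_version() {
    sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Sample line copied from the output above; on a real system use:
#   java -version 2>&1 | parse_java_version
sample='java version "1.8.0_131"'
detected=$(printf '%s\n' "$sample" | parse_java_version)
echo "$detected"
```

Note the `2>&1` in the commented usage: `java -version` writes to standard error, so it has to be redirected before it can be piped.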

If you get an error here you may need to uninstall any packages on your system that depend on the old Java version and redo the process. One example may be LibreOffice (often installed by default and dependent on the default Java installation), which we could remove with the following:

Debian based systems:

apt-get -y remove --purge '.*libreoffice.*'

CentOS based systems:

yum -y remove '*libreoffice*'

For those with errors: after removing any packages that depended on the old versions of Java, go back through the installation steps outlined above and you should be able to print out your Java version.

Set Java environment vars for new version

First we will set our environment variables using a script we will make here: /etc/profile.d/jdk.sh

cat << 'EOF' > /etc/profile.d/jdk.sh

#!/bin/sh

#

# Dynamic loading of java environment variables

# We also set class path to include the following:

# if already set the current path is included,

# the current directory, the jdk and jre lib dirs,

# and the shared java library dir.

#

export JAVA_HOME=$(readlink -f /usr/bin/javac 2> /dev/null | sed 's:/bin/javac::' 2> /dev/null)

export JRE_HOME=$(readlink -f /usr/bin/java 2> /dev/null | sed 's:/bin/java::' 2> /dev/null)

export J2SDKDIR=$JAVA_HOME J2REDIR=$JAVA_HOME

export DERBY_HOME=$JAVA_HOME/db

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$DERBY_HOME/bin

export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JRE_HOME/lib:/usr/share/java

EOF

After our script is created make it executable, and then source the main profile.

chmod +x /etc/profile.d/jdk.sh

source /etc/profile

You should now see the environment variables we added above when running this cmd:

env | grep -oP '.*(JAVA_HOME|JRE_HOME|J2SDKDIR|J2REDIR|DERBY_HOME|CLASSPATH).*'
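To see how the `readlink -f ... | sed` pipeline in jdk.sh arrives at JAVA_HOME, you can reproduce it on a throwaway directory tree. The paths below are made up for the demonstration and assume a `readlink` that supports `-f` (as GNU coreutils does).

```shell
# Build a throwaway tree that mimics /usr/bin/javac pointing into a JDK directory.
demo_root=$(mktemp -d)
mkdir -p "$demo_root/jvm/jdk1.8.0_131/bin" "$demo_root/usr/bin"
touch "$demo_root/jvm/jdk1.8.0_131/bin/javac"
ln -s "$demo_root/jvm/jdk1.8.0_131/bin/javac" "$demo_root/usr/bin/javac"

# Same pipeline as jdk.sh: resolve the symlink, then strip the trailing /bin/javac.
demo_java_home=$(readlink -f "$demo_root/usr/bin/javac" | sed 's:/bin/javac::')
echo "$demo_java_home"
```

The printed path ends in `jvm/jdk1.8.0_131`, which is the directory the real script would export as JAVA_HOME on a system laid out this way.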

Lastly we are going to enable the Java client for our web browsers:

Close all instances of firefox and chrome:

killall firefox

killall chrome

Grab the plugin from your Oracle Java install and link it:

JAVA_PLUGIN=$(find ${JRE_HOME}/ -name 'libnpjp2.so')

mkdir -p /usr/lib/firefox-addons/plugins && cd /usr/lib/firefox-addons/plugins

ln -s ${JAVA_PLUGIN}

mkdir -p /opt/google/chrome/plugins && cd /opt/google/chrome/plugins

ln -s ${JAVA_PLUGIN}

unset JAVA_PLUGIN

cd ~

Install the Apache Directory Studio suite

Download either the 32bit or 64bit (depending on your OS) version of Apache DS with the following:

APACHE_DSx64_URL="http://www-eu.apache.org/dist/directory/studio/2.0.0.v20161101-M12/ApacheDirectoryStudio-2.0.0.v20161101-M12-linux.gtk.x86_64.tar.gz"

APACHE_DSx32_URL="http://www-eu.apache.org/dist/directory/studio/2.0.0.v20161101-M12/ApacheDirectoryStudio-2.0.0.v20161101-M12-linux.gtk.x86.tar.gz"

APACHE_DS_URL="the url for your os architecture"

APACHE_DS_TAR=${APACHE_DS_URL##*/}

cd /usr/local/

wget --no-cookies --no-check-certificate "$APACHE_DS_URL"

tar -xzf "$APACHE_DS_TAR" && rm -f "$APACHE_DS_TAR" && cd ApacheDirectoryStudio
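Picking the URL can also be automated from the output of `uname -m`. The sketch below reuses the two URLs from above; `pick_apache_ds_url` is a hypothetical helper, and the mapping of `x86_64` and `i?86` to the two archives is an assumption about what your machine reports.

```shell
APACHE_DSx64_URL="http://www-eu.apache.org/dist/directory/studio/2.0.0.v20161101-M12/ApacheDirectoryStudio-2.0.0.v20161101-M12-linux.gtk.x86_64.tar.gz"
APACHE_DSx32_URL="http://www-eu.apache.org/dist/directory/studio/2.0.0.v20161101-M12/ApacheDirectoryStudio-2.0.0.v20161101-M12-linux.gtk.x86.tar.gz"

# Hypothetical helper: map a `uname -m` value to one of the two URLs.
pick_apache_ds_url() {
    case "$1" in
        x86_64) echo "$APACHE_DSx64_URL" ;;
        i?86)   echo "$APACHE_DSx32_URL" ;;
        *)      echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
}

# Fall back to a manual choice if the architecture is not recognised.
APACHE_DS_URL=$(pick_apache_ds_url "$(uname -m)") || echo "set APACHE_DS_URL manually" >&2
echo "$APACHE_DS_URL"
```

The resulting APACHE_DS_URL can then be used with the wget and tar cmds exactly as shown above.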

Now you can start the gui with the following cmd:

./ApacheDirectoryStudio

If you are configuring this on a remote server (like I was) you will need to set the ssh configs properly to forward X-applications (GUI / display) over to your local machine. If you are configuring the server locally and have a display you can skip this step.

Enable X11 forwarding over ssh on the remote server:

sed -i -e '0,/.*X11Forwarding.*/{s/.*X11Forwarding.*/X11Forwarding yes/}; 0,/.*X11UseLocalhost.*/{s/.*X11UseLocalhost.*/X11UseLocalhost yes/}' /etc/ssh/sshd_config

Restart the SSH daemon on the server so the change takes effect, then disconnect and allow your local machine’s ssh client to receive the forwarded display:

sed -i -e '0,/.*ForwardX11.*/{s/.*ForwardX11.*/ForwardX11 yes/}; 0,/.*ForwardX11Trusted.*/{s/.*ForwardX11Trusted.*/ForwardX11Trusted yes/}' /etc/ssh/ssh_config
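Before touching the real SSH config files, you may want to try the first-match-only sed expression on a scratch file. This sketch assumes GNU sed (the `0,/regex/` address is a GNU extension), and the file contents are made up for the demonstration.

```shell
# Scratch file with two lines mentioning the option; only the first should change.
tmp_conf=$(mktemp)
printf '%s\n' '#X11Forwarding no' 'PermitRootLogin no' \
    '# X11Forwarding again in a comment' > "$tmp_conf"

# 0,/re/ limits the substitution to the range ending at the first matching line.
sed -i -e '0,/.*X11Forwarding.*/{s/.*X11Forwarding.*/X11Forwarding yes/}' "$tmp_conf"
cat "$tmp_conf"
```

The first line becomes `X11Forwarding yes` while the later comment is left alone, which is why the cmd is safe to run on a config file that mentions the option more than once.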

Then establish an ssh connection with remote X11 forwarding enabled and run it:

ssh -Y -C -4 'your remote user@your remote server ip'

cd /usr/local/ApacheDirectoryStudio

./ApacheDirectoryStudio
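If the GUI does not appear, a quick thing to check in the remote session is whether the DISPLAY variable was set by the ssh connection. A minimal sketch, with `check_display` as a hypothetical helper name:

```shell
# Hypothetical helper: report whether an X display is available in this session.
check_display() {
    if [ -n "${DISPLAY:-}" ]; then
        echo "X11 display available: $DISPLAY"
    else
        echo "DISPLAY is not set; X11 forwarding is not active" >&2
        return 1
    fi
}

check_display || echo "reconnect with ssh -Y before starting the GUI"
```

With forwarding working, the variable typically holds a value such as `localhost:10.0`; an empty value usually means the server or client side of the forwarding config was not picked up.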

You should now see the GUI appear. Let’s connect to our OpenLDAP server.

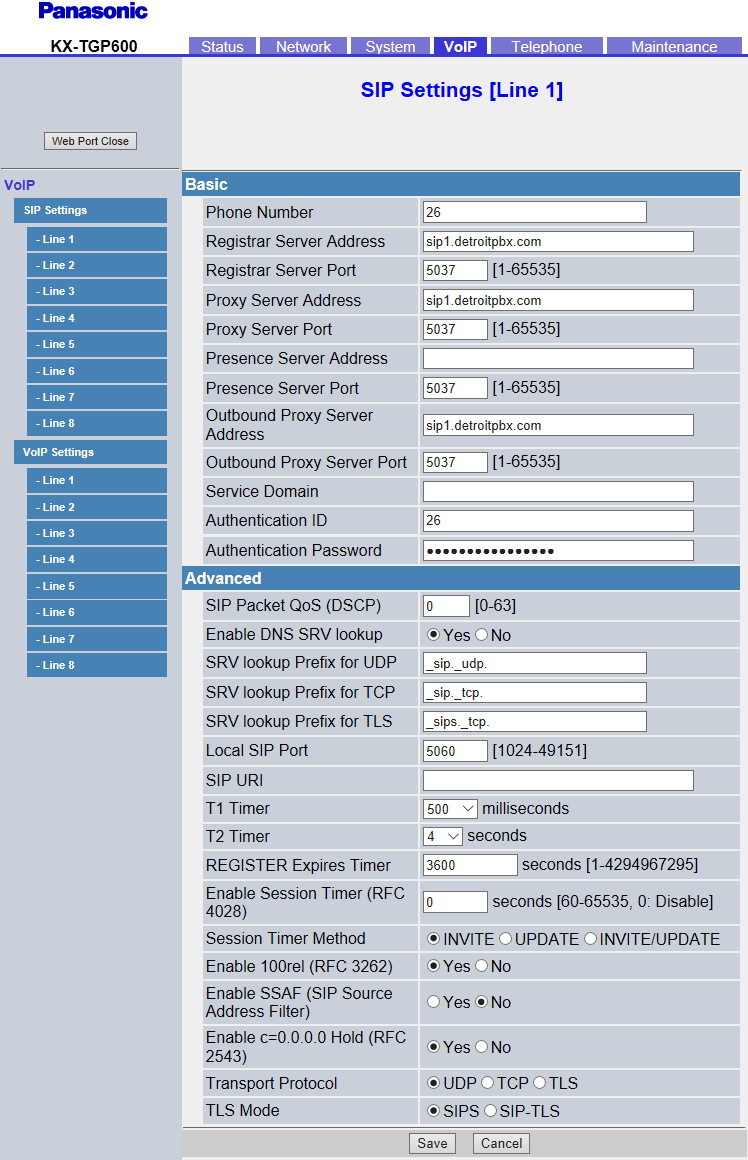

Click on the buttons File –> New –> LDAP Browser –> LDAP Connection

Enter a name for your connection and enter the hostname of the LDAP server (you can find it using the **hostname** cmd)

Make sure to check your connection to the server by pressing the “Check Network Parameter” button before moving on. If you get an error, verify connectivity to the OpenLDAP server and the port that you entered and try again.

Enter the LDAP admin’s Bind DN and password. From our example in the first blog post it would look something like this:

Bind DN or user: cn=Manager,dc=dopensource,dc=com

Bind Password: ‘ldap admin password’

Make sure to click the “Check Authentication” button and verify your credentials before moving on.

Enter your Base DN, for our example this was: **dc=dopensource,dc=com**

Check the feature box “Fetch Operational attributes while browsing”

You can now click “finish” and your connection to your OpenLDAP server through Apache DS is complete.
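Since the Base DN and Bind DN both follow from your domain name, they can be derived rather than typed by hand. This is a small sketch assuming the `cn=Manager` admin entry from the example above; `domain_to_dn` is a hypothetical helper name.

```shell
# Hypothetical helper: turn "example.org" into "dc=example,dc=org".
domain_to_dn() {
    printf '%s\n' "$1" | sed 's/\./,dc=/g; s/^/dc=/'
}

# Replace dopensource.com with your own domain.
BASE_DN=$(domain_to_dn dopensource.com)
BIND_DN="cn=Manager,$BASE_DN"
echo "Base DN: $BASE_DN"
echo "Bind DN: $BIND_DN"
```

The two printed values are what you would paste into the Base DN and Bind DN fields of the connection wizard.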

To view your DN and to manage the entries we made earlier:

Click the LDAP icon on the left and it will bring up the sidebar for LDAP

Alternatively you can navigate to your LDAP tree by clicking Window –> Show View –> LDAP Browser

Congratulations, you have set up your Apache DS front-end application to access your OpenLDAP server!

For More Information

For more information, or for any questions visit us at:

dOpenSource

Flyball Labs

For professional services and LDAP configurations see:

dOpenSource OpenLDAP

dOpenSource OpenLDAP Services

If you have any suggestions for topics you would like us to cover in our next blog post please leave them in the comments 🙂

Written By:

DevOpSec

Software Engineer

Flyball Labs

— Keep Calm and Code On —